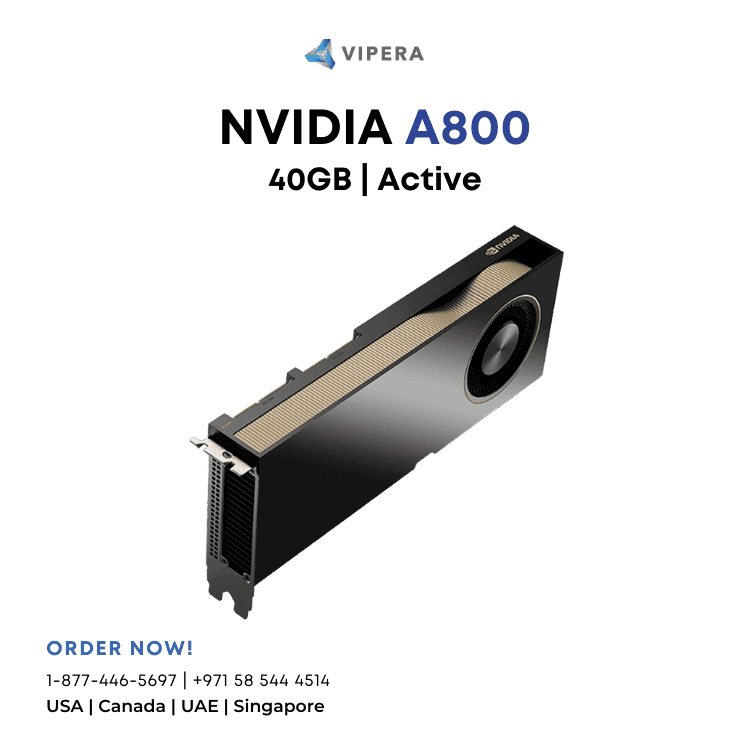

About Our Enterprise and AI GPUs

Viperatech’s enterprise GPU selection delivers advanced AI graphics card solutions designed for demanding workloads such as deep learning, high-performance computing, and large-scale inference. Whether you’re training complex models or deploying AI at scale, our AI GPUs offer unmatched compute power, reliability, and efficiency. As a trusted partner, we specialize in Intel and NVIDIA enterprise GPU offerings that drive innovation across data centers, research institutions, and development pipelines.

Why Choose Our AI Graphics Card Solutions?

✓ Maximum AI Performance: Our GPUs are built to sustain high utilization while handling massive parallel compute workloads, ensuring your AI infrastructure remains fast, efficient, and ready for tomorrow’s challenges.

✓ Reliability and Durability: Engineered for 24/7 operation, these systems feature optimized cooling, power distribution, and enterprise-grade components, minimizing downtime and increasing ROI.

✓ Scalability for Every Environment: From an individual workstation GPU to multi-node clusters, we offer solutions that scale gracefully and integrate seamlessly into enterprise deployments.

✓ Expert Support and Integration: Our team guides you from selection to deployment and maintenance, turning complex AI infrastructure into dependable, high-performing systems.