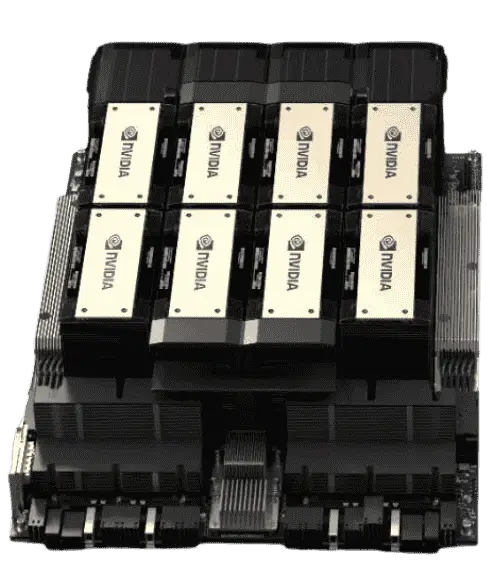

✔ Form Factor: H200 SXM

✔ FP64: 34 TFLOPS

✔ FP64 Tensor Core: 67 TFLOPS

✔ FP32: 67 TFLOPS

✔ TF32 Tensor Core: 989 TFLOPS

✔ BFLOAT16 Tensor Core: 1,979 TFLOPS

✔ FP16 Tensor Core: 1,979 TFLOPS

✔ FP8 Tensor Core: 3,958 TFLOPS

✔ INT8 Tensor Core: 3,958 TFLOPS

✔ GPU Memory: 141GB

✔ GPU Memory Bandwidth: 4.8TB/s

✔ Decoders: 7 NVDEC, 7 JPEG

✔ Max Thermal Design Power (TDP): Up to 700W per card (configurable)

✔ Multi-Instance GPUs: Up to 7 MIGs @16.5GB each

✔ Interconnect: NVIDIA NVLink®: > 900GB/s, PCIe Gen5: 128GB/s

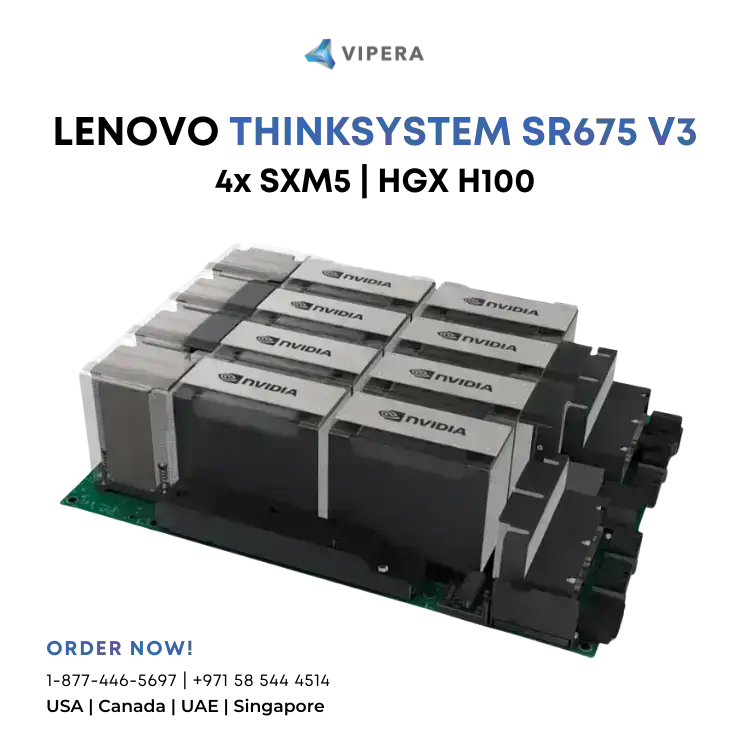

✔ Server Options: NVIDIA HGX™ H200 partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs, NVIDIA AI Enterprise Add-on

✔ Cooling: Liquid Closed Loop with Thermal Heatsinks

✔ Warranty: 3 years return-to-base repair or replace

Expected delivery in late December 2024. All sales are final; no returns or cancellations. For bulk inquiries, contact a live chat agent or call our toll-free number.