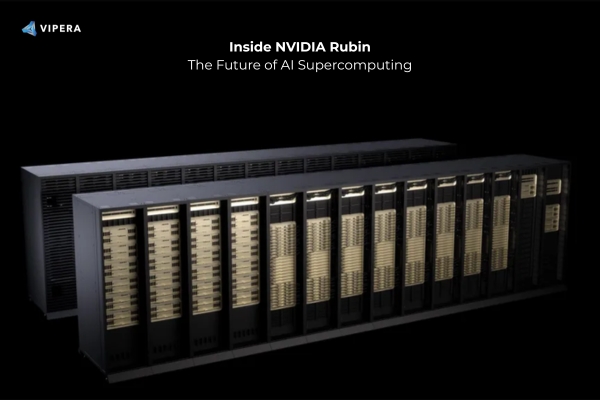

In January 2026, NVIDIA unveiled the Rubin platform, a radical shift in how AI is built, scaled, and deployed. Unlike traditional hardware upgrades that focus on individual chips, Rubin represents a full-system rethinking: compute, memory, networking, security, and software are co-designed to power what NVIDIA calls the next era of AI factories, always-on systems engineered to convert data into intelligence continuously and efficiently.

What Is the Rubin Platform?

At its core, Rubin isn’t just one product; it’s a rack-scale AI supercomputer architecture designed for:

✅ Massively scaled reasoning and inference

✅ Sustained throughput across training and inference

✅ Predictable performance and cost-efficient operation

✅ Enterprise-grade reliability, availability, and security

NVIDIA’s approach redefines the data center from a collection of servers into a coherent AI system where hardware and software interoperate at scale.

Deep Dive: Rubin Platform, Individual Chip Specs & System Capabilities

The Rubin platform isn’t a single chip; it’s a rack-scale AI supercomputer architecture built around six purpose-built silicon components, designed from the ground up to work together for next-gen AI workloads.

1. NVIDIA Vera CPU

Role: Host, orchestrator, and data movement engine for AI factories

Cores: 88 custom NVIDIA Olympus cores with 176 threads using spatial multithreading

Memory: Up to 1.5 TB of ultrahigh-bandwidth system memory (LPDDR5X)

NVLink-C2C Bandwidth: ~1.8 TB/s for high-speed CPU↔GPU communication

Why it matters: Traditional CPUs can bottleneck AI training and inference. Vera is optimized to sustain GPU utilization by feeding data and coordinating memory across the entire rack with minimal latency, which is essential in large-scale training, long-context inference, and agentic AI.

2. NVIDIA Rubin GPU

Role: Primary AI compute engine

Transformer Engine: 3rd-generation with hardware-accelerated adaptive compression

Peak Compute: ~50 petaflops of NVFP4 AI performance per GPU

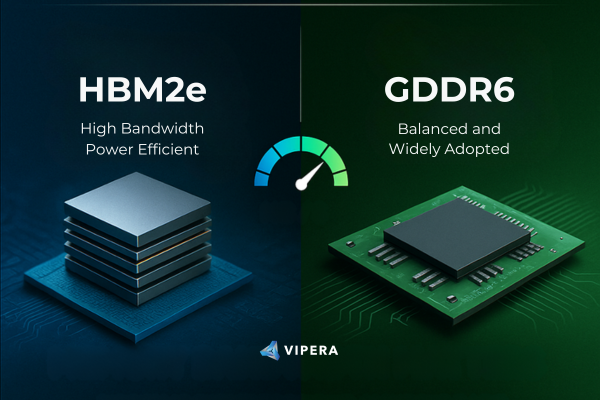

Memory: HBM4 next-gen high-bandwidth memory

Why it matters: The Rubin GPU delivers massive AI throughput and is tailored for large transformer-style models, inference with long sequences, and generative tasks at scale.

3. NVLink 6 Switch

Role: High-speed GPU-to-GPU interconnect

GPU Bandwidth: ~3.6 TB/s bidirectional per GPU

Rack-Scale Bandwidth: ~260 TB/s in NVL72 systems

Why it matters: For multi-GPU training and reasoning, raw compute isn’t enough; GPUs must efficiently share data. NVLink 6 enables near-memory-local communication across dozens (or eventually hundreds) of GPUs without the overhead of traditional networking fabrics.
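
The rack-scale figure follows from the per-GPU figure. As a quick sanity check, this sketch multiplies the two numbers quoted above; the constant and function names are illustrative only, and the inputs are the article's approximate values rather than measured data.

```python
# Approximate figures quoted in this article.
NVLINK6_PER_GPU_TBPS = 3.6   # TB/s, bidirectional, per GPU
GPUS_PER_RACK = 72           # GPUs in an NVL72 rack


def rack_nvlink_bandwidth(per_gpu_tbps: float, gpus: int) -> float:
    """Sum the per-GPU NVLink bandwidth across every GPU in the rack (TB/s)."""
    return per_gpu_tbps * gpus


total_tbps = rack_nvlink_bandwidth(NVLINK6_PER_GPU_TBPS, GPUS_PER_RACK)
print(f"{total_tbps:.1f} TB/s")  # 259.2 TB/s, i.e. the quoted ~260 TB/s
```

The result is an aggregate of link capacity, not a measurement of achieved throughput for any particular workload.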

4. NVIDIA ConnectX-9 SuperNIC

Role: Smart high-bandwidth network interface for servers

Per-GPU Bandwidth: ~1.6 Tb/s

Capabilities: Ethernet + InfiniBand compatibility, programmable networking

Why it matters: ConnectX-9 doesn’t just carry data; it accelerates network tasks, offloads CPU work, and enables faster distributed training and inference workflows across racks.
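
Note the units: the ConnectX-9 figure is in terabits per second (Tb/s), while the NVLink figure is in terabytes per second (TB/s). The sketch below converts both to gigabytes per second so they can be compared. It assumes decimal units and 8 bits per byte, and the comparison is only rough because the NVLink number quoted in this article is bidirectional.

```python
def tbit_to_gbyte_per_s(tbit_per_s: float) -> float:
    """Convert terabits/s to gigabytes/s (decimal units, 8 bits per byte)."""
    return tbit_per_s * 1000 / 8


connectx9_gb_s = tbit_to_gbyte_per_s(1.6)  # scale-out NIC bandwidth per GPU
nvlink6_gb_s = 3.6 * 1000                  # scale-up NVLink bandwidth per GPU

print(f"ConnectX-9: {connectx9_gb_s:.0f} GB/s")             # ConnectX-9: 200 GB/s
print(f"NVLink 6:   {nvlink6_gb_s:.0f} GB/s")               # NVLink 6:   3600 GB/s
print(f"Ratio:      {nvlink6_gb_s / connectx9_gb_s:.0f}x")  # Ratio:      18x
```

The gap of more than an order of magnitude is why traffic inside the rack stays on NVLink, with the NIC handling communication between racks.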

5. NVIDIA BlueField-4 DPU

Role: Infrastructure and security offload processor

Functions:

- AI-native storage via Inference Context Memory Storage

- Isolation and confidential computing security (ASTRA)

- Telemetry, congestion control, and job management

Why it matters: Modern AI workloads need massive shared caches and secure multi-tenant isolation. BlueField-4 brings those features without consuming precious GPU cycles.

6. NVIDIA Spectrum-6 Ethernet Switch

Role: Backbone for large-scale Ethernet networking

Improvements:

- ~5× better power efficiency vs prior generations

- More reliable, longer uptime fabrics

- Supports Spectrum-X Ethernet fabrics that can span multiple data centers

Why it matters: High-performance Ethernet makes it easier to scale AI clusters across racks and even multiple facilities without the cost and complexity of specialized fabrics.

Systems Built on Rubin

NVL72

The flagship rack-scale system that combines:

- 72 Rubin GPUs

- 36 Vera CPUs

- NVLink 6 interconnect spine

- ConnectX-9, BlueField-4, Spectrum-6 fabrics

The entire rack behaves as a coherent AI supercomputer, not just a collection of separate servers.
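
To put the rack in numbers, this sketch derives a few aggregates from the per-chip figures quoted earlier in the article. The variable names are illustrative, and the compute figure is a peak value, not sustained throughput.

```python
# Per-chip figures quoted earlier in this article (approximate).
GPUS = 72
CPUS = 36
NVFP4_PFLOPS_PER_GPU = 50
CORES_PER_CPU = 88

rack_eflops = GPUS * NVFP4_PFLOPS_PER_GPU / 1000  # 1 EFLOPS = 1000 PFLOPS
gpus_per_cpu = GPUS // CPUS
total_cpu_cores = CPUS * CORES_PER_CPU

print(f"Peak NVFP4 compute: {rack_eflops:.1f} EFLOPS")  # 3.6 EFLOPS
print(f"GPUs per Vera CPU:  {gpus_per_cpu}")            # 2
print(f"Total CPU cores:    {total_cpu_cores}")         # 3168
```

The 3.6 EFLOPS result lines up with the FP4 inference figure listed for Rubin in the comparison table later in this article.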

What Rubin Means for AI Infrastructure

Rubin isn’t just faster; its architectural innovations shift how AI systems are built and used at scale.

1. Up to 10× Lower Inference Token Cost

Rubin’s co-designed compute and networking reduce the cost per token processed by up to ten times compared to NVIDIA’s previous Blackwell generation. That’s a big deal for inference workloads, where cost per token directly affects the economics of production AI services.
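
To make the economics concrete, here is a minimal sketch of how cost per token relates to throughput. The hourly cost and the throughput values are hypothetical placeholders chosen only to illustrate a 10× ratio; they are not NVIDIA or cloud-provider figures.

```python
def cost_per_million_tokens(cost_per_hour: float, tokens_per_second: float) -> float:
    """Serving cost in dollars per one million tokens at a steady throughput."""
    tokens_per_hour = tokens_per_second * 3600
    return cost_per_hour / tokens_per_hour * 1_000_000


# Hypothetical numbers: same hourly cost, 10x the token throughput.
baseline = cost_per_million_tokens(cost_per_hour=300.0, tokens_per_second=100_000)
improved = cost_per_million_tokens(cost_per_hour=300.0, tokens_per_second=1_000_000)

print(f"${baseline:.3f} vs ${improved:.3f} per million tokens")  # $0.833 vs $0.083
print(f"{baseline / improved:.0f}x cheaper")                     # 10x cheaper
```

In practice the hourly cost also changes between generations, so the real ratio depends on both terms of the division.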

2. 4× Fewer GPUs for MoE Training

Mixture-of-Experts (MoE) models, which dynamically route work to expert subnetworks, are notoriously communication-heavy. Rubin’s network and memory architecture lets teams train these models using up to 4× fewer GPUs, lowering capital and operating costs.
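
The routing step is where the communication cost comes from. Below is a minimal sketch of generic top-k gating in plain Python; it is a textbook illustration, not NVIDIA's implementation, and the function names and logit values are made up. When experts live on different GPUs, each token's activations must be sent to whichever GPUs host its selected experts.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k=2):
    """Return [(expert_index, weight), ...] for the k highest-scoring experts.

    Weights are renormalized so the selected experts sum to 1.
    """
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


# Made-up router logits for two tokens over four experts.
tokens = {"tok0": [2.0, 0.1, -1.0, 0.5], "tok1": [-0.3, 1.5, 1.4, 0.0]}
for name, logits in tokens.items():
    experts = [index for index, _ in route_top_k(logits)]
    print(name, "->", experts)  # tok0 -> [0, 3], then tok1 -> [1, 2]
```

Because different tokens pick different experts, every training step involves an all-to-all exchange between GPUs, which is why interconnect bandwidth matters so much for MoE models.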

3. Cloud Providers Can Offer More Powerful Instances

Major cloud platforms (AWS, Google Cloud, Microsoft Azure, and OCI) and specialist providers like CoreWeave and Lambda are already planning Rubin-based instances. These will enable customers to:

- Run massive reasoning models, not just traditional LLMs

- Handle multi-turn agentic AI

- Support longer token contexts without performance cliffs

- Deliver predictable latency at scale

This opens new use cases across enterprise AI, genomics, robotics, and real-time multimodal interfaces.

4. More Efficient AI Operations for Model Creators

For developers and researchers building large AI models:

✅ Lower infrastructure costs for both training and inference

✅ Easier scaling across racks without complex network engineering

✅ Better alignment with workload patterns — especially agentic and reasoning tasks

✅ Stronger security and multi-tenant isolation for enterprise deployments

This essentially shrinks the time to market for sophisticated AI products and enables new classes of models (e.g., reasoning agents, massive context models) that were previously too expensive to run at scale.

5. AI Native Storage & Memory Sharing

With BlueField-4 and new Inference Context Memory layers, Rubin accelerates the reuse and sharing of context data, a major bottleneck when hundreds of users or sessions need simultaneous access to massive reference memories. This matters for agents that learn over time or services that personalize across sessions.
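
The idea of reusing context can be sketched with a toy cache. Everything here is hypothetical: `SharedContextCache` and `expensive_prefill` are illustrative names, not part of any NVIDIA API, and the "context" is a stand-in for whatever a real system precomputes for a prompt prefix (for example, a KV cache).

```python
import hashlib


class SharedContextCache:
    """Toy shared store mapping a prompt-prefix hash to its precomputed context."""

    def __init__(self):
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prefix: str) -> str:
        return hashlib.sha256(prefix.encode("utf-8")).hexdigest()

    def get_or_compute(self, prefix, compute):
        """Return the cached context for `prefix`, computing it only on a miss."""
        key = self._key(prefix)
        if key in self._store:
            self.hits += 1
        else:
            self.misses += 1
            self._store[key] = compute(prefix)
        return self._store[key]


def expensive_prefill(prefix):
    # Stand-in for the costly pass that builds context from a prompt prefix.
    return [len(word) for word in prefix.split()]


cache = SharedContextCache()
shared_prefix = "You are a helpful assistant for the company knowledge base."
for _session in range(3):
    cache.get_or_compute(shared_prefix, expensive_prefill)

print(cache.hits, cache.misses)  # 2 1
```

Only the first session pays for the prefill; later sessions that share the same prefix read the stored result instead of recomputing it.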

Technical Comparison: Blackwell vs Rubin vs Feynman

Below is a chart blueprint you can use in slides or infographics. The numbers are based on official and widely reported platform data.

Performance & Memory (compute per full rack; memory and interconnect per GPU)

| Metric | Blackwell (NVL72) | Rubin (NVL144) | Rubin Ultra (2027) | Feynman (2028) |
| --- | --- | --- | --- | --- |
| FP4 Inference | ~1.1 EFLOPS (dense) | ~3.6 EFLOPS (~3×) | ~15 EFLOPS | TBD (expected >15) |
| FP8 Training | ~0.36 EFLOPS | ~1.2 EFLOPS | ~5 EFLOPS | TBD |
| GPU Memory per GPU | 192 GB HBM3e | 288 GB HBM4 | 1 TB HBM4e | Expected ≥1 TB |
| Memory Bandwidth | ~8 TB/s | ~13–22 TB/s | >20 TB/s | Expected >20 TB/s |
| Interconnect | NVLink 5 / ~1.8 TB/s | NVLink 6 / ~3.6 TB/s | NVLink 6–7 dbl throughput | Likely next gen NVLink |
| CPU Integration | Grace | Vera (custom 88-core) | Vera | Likely Vera-based |

Notes:

- Rubin marks a shift to HBM4 memory and much higher interconnect throughput: up to ~3.6 TB/s per GPU, roughly double Blackwell’s.

- Rubin Ultra (2027) expands memory to 1 TB HBM4e per GPU and multiplies compute dramatically.

- Feynman (2028) details are sparse but expected to succeed Rubin with further throughput and architectural gains.

Key Architectural Improvements

Memory Bandwidth Evolution

- Blackwell HBM3e: 8 TB/s

- Rubin HBM4: 13–22 TB/s (varies by config)

- Rubin Ultra HBM4e: ≥20 TB/s

Going from 8 TB/s to 13–22 TB/s is roughly a 1.6× to 2.75× generational increase, depending on configuration, which supports broader model contexts and larger inference batches.
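
The sketch below works out the generational ratio from the figures listed above, then applies a simple bandwidth-bound estimate for single-stream decoding: if every generated token requires reading all model weights once, tokens per second cannot exceed bandwidth divided by weight size. The 140 GB weight size is a hypothetical example, and the estimate ignores batching, caching, and compute limits.

```python
BLACKWELL_TB_S = 8.0
RUBIN_TB_S_RANGE = (13.0, 22.0)  # varies by configuration

low_ratio, high_ratio = (bw / BLACKWELL_TB_S for bw in RUBIN_TB_S_RANGE)
print(f"{low_ratio:.3f}x to {high_ratio:.3f}x")  # 1.625x to 2.750x


def max_decode_tokens_per_s(bandwidth_tb_s: float, weights_gb: float) -> float:
    """Upper bound on tokens/s when each token reads all weights once."""
    return bandwidth_tb_s * 1000 / weights_gb


# Hypothetical model with 140 GB of weights resident in GPU memory.
for label, bandwidth in (("Blackwell", 8.0), ("Rubin (high end)", 22.0)):
    bound = max_decode_tokens_per_s(bandwidth, weights_gb=140.0)
    print(f"{label}: {bound:.1f} tokens/s upper bound")
```

Under this model the decode ceiling scales linearly with memory bandwidth, which is why the bandwidth increase matters as much as the added compute.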

Interconnect Throughput (per GPU)

- Blackwell NVLink: ~1.8 TB/s

- Rubin NVLink 6: ~3.6 TB/s

📊 Doubling interconnect bandwidth dramatically improves collective communication for large models, especially Mixture-of-Experts (MoE) and reasoning workloads.
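
As a rough illustration of what doubling means for collectives, this sketch uses the textbook ring all-reduce cost model, in which each GPU sends and receives 2(N-1)/N times the payload. It is a simplified estimate under stated assumptions, not a description of how NVLink switches actually schedule collectives: it ignores latency, assumes per-direction bandwidth is half the quoted bidirectional figure, and uses a hypothetical 10 GB payload.

```python
def ring_allreduce_seconds(size_gb: float, gpus: int, bidir_tb_s: float) -> float:
    """Bandwidth-only estimate of ring all-reduce time (latency ignored)."""
    per_direction_gb_s = bidir_tb_s * 1000 / 2   # assume half in each direction
    traffic_gb = 2 * (gpus - 1) / gpus * size_gb  # data each GPU must send
    return traffic_gb / per_direction_gb_s


for name, bandwidth in (("NVLink 5", 1.8), ("NVLink 6", 3.6)):
    seconds = ring_allreduce_seconds(size_gb=10, gpus=72, bidir_tb_s=bandwidth)
    print(f"{name}: {seconds * 1000:.2f} ms")
# NVLink 5: 21.91 ms
# NVLink 6: 10.96 ms
```

In this bandwidth-bound model the time halves when the link speed doubles; real gains depend on message sizes, topology, and how much communication overlaps with compute.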

Rubin Use Cases, Explained in Depth

NVIDIA designed Rubin for AI-factory workloads: large, sustained, cross-component tasks where communication and memory matter as much as raw compute.

A) Multimodal AI

Rubin’s huge memory pools and bandwidth make it ideal for models processing:

- Text + image + audio

- Long‑sequence reasoning

- Generative tasks with real‑time feedback

This benefits platforms like advanced chat agents, mixed‑media search engines, and real‑time translation.

Why it matters: Larger memory capacity and higher memory bandwidth reduce off-chip data transfers, a key limiter in multimodal scaling.

B) Reasoning & Agentic AI

Modern AI tasks like autonomous planning, long reasoning chains, and continuous stateful agents must:

- Maintain persistent context

- Share memory across sessions

- Synchronize models across chips

Rubin’s integrated memory system and rack-scale coherence enable efficient state sharing, which is crucial for agents like digital assistants, autonomous robotics, or personalized education models.

C) Robotics & Edge‑Cloud Synergy

While Rubin itself is a datacenter platform, it supports the massive reasoning and long planning horizons that robotics needs. Models trained or served on Rubin can be distilled for edge deployment, enabling:

- Collaborative robots (cobots)

- Industrial automation reasoning

- Smart logistics systems

The combination of high memory bandwidth and GPU compute also accelerates simulation‑to‑reality workflows for robotics.

Rubin vs AMD AI Roadmap

AMD is not standing still. At CES 2026, AMD unveiled Helios, a rack-scale AI platform targeting exascale performance within a single rack.

AMD Helios / Instinct Path

- Helios rack system: ~3 AI exaflops per rack, combining MI455X GPUs and EPYC “Venice” CPUs.

- AMD’s MI350/355 series shows competitive memory footprints (e.g., 288 GB HBM3E), comparable with NVIDIA’s older platforms.

- AMD leverages Infinity Fabric and UALink for scaling, but lacks the deep NVLink‑style coherent interconnect that Rubin uses for seamless rack‑scale integration.

📊 Comparative Observations

| Aspect | NVIDIA Rubin | AMD Helios / Instinct |
| --- | --- | --- |
| Interconnect | NVLink 6 (~3.6 TB/s), strong GPU-GPU coherence | Infinity Fabric/UALink, emerging ecosystem |
| Memory (per GPU) | 288 GB HBM4 → 1 TB HBM4e (Ultra) | ~288 GB HBM3E (MI355) |
| Rack-scale compute | Up to ~15 EFLOPS FP4 (Ultra) | ~3 exaflops per rack (Helios) |
| Software ecosystem | CUDA + full AI ecosystem | ROCm + growing ecosystem |
| Cloud momentum | Broad hyperscaler adoption planned | Partnerships (e.g., open deals with OpenAI) |

Strategic Takeaways

Rubin’s strength lies in rack-scale AI factories with coherent interconnects and memory, which makes it well suited to large reasoning and agentic AI workloads.

AMD’s offering emphasizes memory‑rich architectures and openness (modular racks, hybrid deployments), appealing for flexible cloud environments and heterogeneous deployments.

What This Means for Cloud Providers & Model Creators

Cloud AI Providers

Rubin offers:

- Lower cost per token (claimed up to ~10× vs Blackwell), reducing inference and serving costs.

- Efficient scaling from single rack to hyper‑scale clusters.

- High memory & bandwidth for diverse workloads, from multimodal to real‑time reasoning.

Cloud ISVs will be able to:

- Build premium server instances optimized for reasoning tasks.

- Offer large context windows without prohibitive costs.

Model Creators

AI researchers and developers benefit because they can:

- Train MoE and reasoning models with fewer GPUs and less communication overhead.

- Host inference at scale for more users without latency cliffs.

- Use shared memory layers for persistent context across sessions (important for agentic AI).

Summary: Rubin’s Technical & Strategic Impact

| Area | Impact |
| --- | --- |
| Raw compute | ~50 PFLOPS per GPU, HBM4 memory |
| Interconnect | ~3.6 TB/s NVLink, low-latency collective ops |
| Networking | High-bandwidth, programmable NICs + Ethernet fabrics |
| Scalability | Rack = 1 unified supercomputer |
| Cost efficiency | 10× lower inference token cost |
| Cloud deployment | Rubin nodes in major hyperscalers |
| Model innovation | Supports massive context & reasoning models |