On October 6, 2025, AMD and OpenAI announced a landmark multi-year, multi-generation strategic partnership aimed at deploying 6 gigawatts of AMD Instinct GPUs across OpenAI’s next-generation AI infrastructure. The initial phase targets the deployment of 1 gigawatt of AMD Instinct MI450 GPUs, with rollouts beginning in the second half of 2026.

This move marks a significant shift in the AI hardware ecosystem. Below, I break down what this means, why it’s important, and how companies in the AI infrastructure space (like ours) should respond.

Why This Partnership Matters

1. Massive Scale Commitment

Six gigawatts is no small number. This agreement signals that OpenAI is placing strong bets on AMD’s GPU roadmap for full-stack scaling of AI models and workloads.

2. Deepening Collaboration Across Generations

The partnership isn’t limited to one GPU generation. It starts with MI450, but it includes joint collaboration on hardware and software roadmaps going forward. This ensures alignment in architecture, driver support, ecosystem integrations, and optimization across future products.

3. Strategic Incentives and Alignment

As part of the deal, AMD granted OpenAI warrants for up to 160 million AMD common shares, with vesting tied to deployment milestones and performance targets.

This layer of financial alignment underscores how both companies see this not just as a supplier–customer relationship, but a partnership of shared risk and reward.

4. Ecosystem Benefits

One ripple effect of this partnership is that other AI model developers, cloud providers, and systems integrators will increasingly look to AMD’s Instinct line, expect optimized driver stacks, and push for software support and validation. This accelerates the broader AMD AI ecosystem (from low-level drivers to high-level frameworks).

What This Means for the AI Infrastructure Industry

Competitive Pressure on Other GPU Providers

With OpenAI anchoring a multi-gigawatt pact around AMD hardware, competing GPU and accelerator vendors will need to respond through tighter alliances, more aggressive roadmap execution, or differentiation in software and system-level integration.

Software & Stack Optimization Is Key

Hardware alone won’t win. The success of this collaboration depends heavily on co-design of compilers, runtime libraries, AI frameworks, and tooling to fully leverage the hardware capabilities.

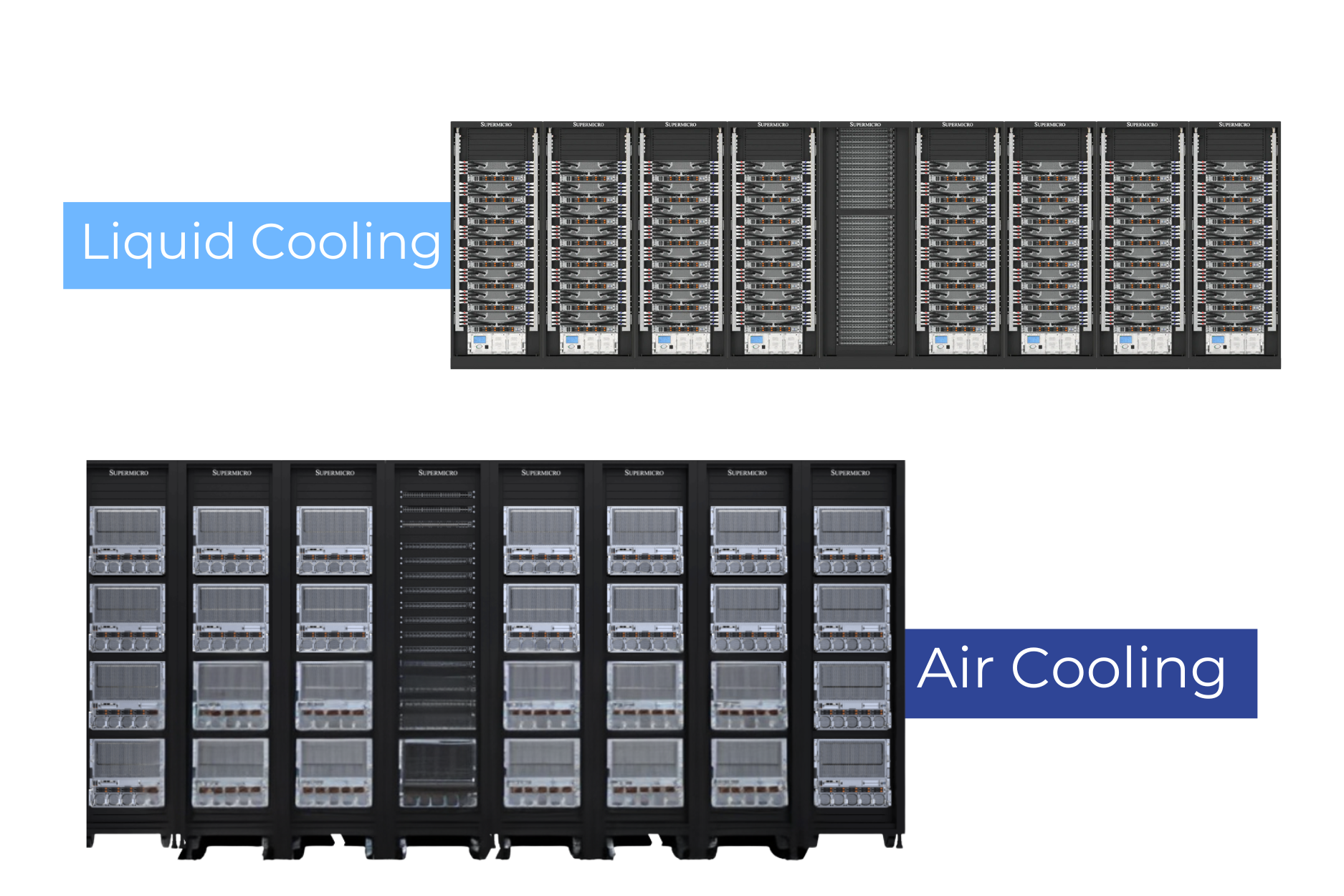

Supply Chain, Manufacturing & Yield Risks

Delivering gigawatt-scale GPU deployment places high demands on fabrication, packaging, memory supply, thermal design, yields, and logistics. From AMD’s side, ensuring consistent performance across many units will be essential.

New Business Models & Service Opportunities

As AI infrastructure scales, we may see more offerings for GPU-as-a-service, hybrid deployments, managed AI clusters, custom AI hardware consulting, and “AI infrastructure orchestration” as differentiators.

Ecosystem Strengthening

Because OpenAI is such a prominent AI player, its commitment to AMD can catalyze third-party tools, ISVs, model libraries, and performance benchmarks to converge toward AMD’s architecture, reinforcing its position in the AI compute stack.

How Companies Should Respond

1. Evaluate AMD GPU Options Now

Early benchmarking and pilot deployments with AMD Instinct (or earlier AMD architectures) can yield insight and positioning advantage.

2. Collaborate on Software Integration

Investing in software optimization, driver tuning, compiler support, and integration with AI frameworks will pay dividends as AMD hardware scales.

3. Design for Future Generations

Because the partnership is multi-generational, hardware and system architects should plan modularity, upgrade paths, and flexible system architectures that can evolve with successive AMD Instinct generations.

4. Strengthen Ecosystem Partnerships

Align with ISVs, system integrators, and cloud providers in the AMD ecosystem to create solution stacks, reference architectures, and validated deployments.

5. Stay Agile Amid Uncertainties

Despite the ambitious commitment, real-world deployment at this scale faces unknown risks, so maintain agility, track performance, and be ready to pivot or hedge where needed.

Looking Ahead

This AMD–OpenAI partnership ushers in a new era for AI compute infrastructure. With such scale and strategic alignment, we may see AI workloads migrate more heavily toward AMD platforms, and supporting tools and software converge accordingly.

At Vipera, we’re already preparing. In the coming months, Vipera is going to be expanding our Instinct offerings to cater to this new surge in the AMD ecosystem.